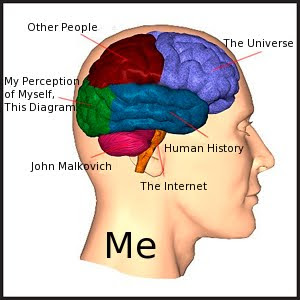

The prelim exam is a two-day test covering everything that I should have learned in 4 years as an undergrad and in my first year as a graduate student in physics. So: Electricity and Magnetism, Special Relativity, Classical Mechanics, Quantum Mechanics, Statistical Mechanics, oh, and lots of math. All of the Ph.D. candidates have to pass the prelim to continue on in the program; we generally get two shots in case we don't pass the first time. So I spent about the last month of the summer preparing for the exam. More than once during the process I was struck with the impression that I had learned too much, that the sum of human knowledge was too great, and that maybe solipsism would be a more tractable philosophy.

My New Theory of Everything:

Back to the point. The prelim exam kind of sucks, and it certainly isn't the best way to test our aptitude for physics, but I guess I made some connections while studying. And--yes--I did pass, so that's what matters at this point.

So the day after the prelim was over, classes started--as a grad student, I do miss having long breaks without anything school-related to do. This term I'm taking Gravitation (General Relativity), which I think will be a fun course.

I have a light course-load because I've found a professor who actually has some extra grant money lying around and is willing to give me some of it to help him do his research. This part of grad school, I've found, is not always as easy as it sounds. Anyway, the professor that I'm working for has been researching granular materials, particularly their critical point where they transition between being liquid-like and being solid-like.

Starting out with a research group can be slow, and my first simulation seems to be... inconclusive at this point. But I can tell you about one aspect of my work that has been interesting: learning how to do general-purpose computing on graphics processing units (GPGPU). So what does this mean? A GPU is the primary component of the graphics card in your computer, and it carries out many of the computations necessary for displaying graphics on your screen. Compare this to the CPU (C for Central), which carries out all of the other instructions from the programs running on your computer.

What you may not know about your CPU is that while your task manager or activity monitor tells you that you are running many processes, each of which is in turn running several threads (a thread being a single sequence of instructions) all at the same time, a single CPU core can only actually handle one set of instructions at a time. So it cycles through all of the threads, but does it so quickly that, to you, your iTunes music plays simultaneously while you type your paper and search for distractions on the internet. (Many computers now have dual-, quad-, or multi-core processors, where the cores really can run threads simultaneously with each other.)

What makes a GPU special is that it has hundreds of cores and can run at least that many threads at once. This was originally useful for computer graphics, especially in video games, where, for example, you might have some 3D object which you could represent with a large array of data. Then, in order to figure out how to display the object on the screen, how to light it, etc., you need to perform some calculations on the entire array of data. While GPUs generally can't do a single calculation as fast as a CPU can, their ability to do many calculations in parallel can make them much faster at this type of calculation. So these types of calculations are offloaded onto the GPU, greatly increasing the overall speed of the program and allowing programmers to make more detailed graphics.

Of course, computers are being used to handle very large sets of data for many more reasons today, including research. So it is only natural that researchers and others would want to get in on the power of graphics processors. One company that has made that possible is NVIDIA with their CUDA architecture, which is what I've been learning to use. They created a library extension to the commonly used C++ programming language that can be used with any of their graphics cards in production today. Learning to write parallel programs has been interesting and difficult at times. I have to worry about things like two threads accessing the same memory at the same time, which can make algorithms for even simple things much more complicated. But I'm finally getting the hang of it, and pretty soon we'll see what kind of speedup it can give our programs. Anyway, I think it's kind of interesting stuff, and it's a pretty safe bet that computers and computer programs will increasingly rely on massively parallel processors like GPUs in the future.

Finally, I realize that got kind of long, and I don't know if it's really interesting to everyone else, so I'll leave you with the YouTube video I've been enjoying this weekend:

Someone needs to mash this up into a debate between Phil Davidson and Basilmarceaux.com. I smell youtube gold! Get on that internet.

That video is hilarious and filled with potential.

Here is a subpar remix:

http://www.youtube.com/watch?v=RGFi3sbCzG4

I think the remix with the transformers trailer music is actually pretty good. Simple, but good.

this video is redic. i'm laughing out loud right now.

"i will hit the ground running,

come out swinging,

and end up winning."

he's so inspirational

I think he prepared for his speech by watching old Chris Farley skits on SNL. At least that's what it reminds me of.
